Mainframe Data Lineage: The Bridge Between Managing Today and Modernizing Tomorrow

Mainframes aren't going anywhere overnight. Despite the industry's push toward cloud migration and modernization, the reality is that many financial institutions still rely on mainframe systems to process millions of daily transactions, calculate interest accruals, manage account records, and run core business operations. And they will for years to come.

Modernization is the eventual reality for every organization still running on mainframe. But "eventual" is doing a lot of heavy lifting in that sentence. For many financial institutions, a full modernization effort is on the roadmap but years away — dependent on budget cycles, vendor timelines, regulatory considerations, and a hundred other competing priorities. In the meantime, these systems still need to be maintained — and that's where things get increasingly risky.

The hidden cost of "just making a change"

When a business requirement changes — say, a new regulation requires a different calculation methodology, or a product team needs to update how accrued interest is computed — someone has to go into the mainframe and update the code. Sounds straightforward enough. Except it's not.

Mainframe COBOL codebases are often decades old. They've been written, rewritten, and patched by generations of engineers, many of whom have long since left the organization. A single mainframe environment can contain tens of thousands of COBOL modules, each with hundreds or thousands of lines of code. Variables branch across modules. Tables are read and updated in ways that aren't always documented. Conditional logic sends data down different paths depending on record types, dates, or account classifications that may have made perfect sense in 1998 but aren't intuitive to anyone working today.

Before a mainframe engineer can write a single new line of code, they need to answer a deceptively simple question: What will this change affect?

And answering that question — tracing a variable backward through modules, understanding which tables get updated, identifying upstream and downstream dependencies — can take weeks or even months of manual investigation. One engineer we've worked with estimated that investigating the impact of a change takes substantially longer than actually making the change.

Why mainframe management feels like navigating a black box

The term "black box" gets used a lot in mainframe conversations, and for good reason. The challenge isn't that the code doesn't work — it usually works remarkably well. The challenge is that nobody fully understands how and why it works the way it does.

Consider what a typical investigation looks like without modern tooling. An engineer receives a request from the business: "We need to update how we calculate X." To comply, that engineer has to:

- Determine a relevant starting point for researching “X”, which may be a business term or a system term. For example, the starting point could be a system variable in a frequently accessed COBOL module.

- Open the relevant COBOL module (which might be thousands of lines long)

- Find and trace the variable in question through the code

- Identify every table and field it touches

- Follow it across modules when it gets called or referenced elsewhere, keeping track of pathways where the variable may take on a new name

- Map out conditional branching logic that might treat the variable differently based on account type, date ranges, or other factors

- Determine which downstream processes depend on the output

- Document all of this before they can even begin to assess whether the change is safe to make

Now multiply that by the reality that a single environment might contain anywhere from 50,000 to 500,000 or even 5,000,000 modules. It's not hard to see why organizations describe their mainframe as a black box, and why changes feel so high-stakes.
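To make the manual steps listed above concrete, here is a minimal Python sketch of the hop-by-hop trace an engineer performs by hand. Everything in it is hypothetical: the module names, the field names, and the two regular expressions, which only recognize single-target MOVE statements and simple COMPUTE statements. It also assumes that a field name shared by two modules refers to the same data (for example through a shared copybook or record), which real COBOL does not guarantee.

```python
import re
from collections import deque

# Hypothetical, heavily simplified COBOL fragments keyed by module name.
MODULES = {
    "ACCRUAL1": """
        MOVE ACCT-RATE TO CALC-RATE.
        COMPUTE INT-AMT = ACCT-BALANCE * CALC-RATE / 365.
    """,
    "STMT0042": """
        MOVE INT-AMT TO STMT-INT-AMT.
    """,
}

# Simplifying assumption: one source and one target per MOVE.
MOVE_RE = re.compile(r"MOVE\s+([A-Z0-9-]+)\s+TO\s+([A-Z0-9-]+)")
# Simplifying assumption: the expression contains no decimal points.
COMPUTE_RE = re.compile(r"COMPUTE\s+([A-Z0-9-]+)\s*=\s*([^.]+)\.")
IDENT_RE = re.compile(r"[A-Z][A-Z0-9-]*")


def build_edges(modules):
    """Return (source_field, target_field, module) for every data movement found."""
    edges = []
    for name, text in modules.items():
        for src, dst in MOVE_RE.findall(text):
            edges.append((src, dst, name))
        for dst, expr in COMPUTE_RE.findall(text):
            for src in IDENT_RE.findall(expr):
                edges.append((src, dst, name))
    return edges


def trace_forward(start, modules):
    """Breadth-first walk from `start`, recording each hop and each rename."""
    edges = build_edges(modules)
    seen, queue, hops = {start}, deque([start]), []
    while queue:
        field = queue.popleft()
        for src, dst, module in edges:
            if src == field:
                hops.append((module, src, dst))
                if dst not in seen:
                    seen.add(dst)
                    queue.append(dst)
    return hops


for module, src, dst in trace_forward("ACCT-RATE", MODULES):
    print(f"{module}: {src} -> {dst}")
```

Even in this toy case the value changes names twice before it reaches a statement field. Real code adds copybooks, CALL parameters, redefinitions, and conditional paths that this sketch ignores, which is where the weeks of investigation go.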

The real risk: unintended consequences

The fear isn't hypothetical. When an engineer updates a module without fully understanding the dependencies, the consequences can ripple across systems. A calculation that looked isolated might feed into downstream reporting. A field that seemed unused might actually be read by another module under specific conditions. A change to one branch of conditional logic might alter outputs for an account type that wasn't part of the original requirement.

These kinds of unintended consequences don't always surface immediately. Sometimes they show up in reconciliation discrepancies weeks later. Sometimes a client calls and says, "My statement looks different this month." By that point, the investigation to find the root cause is just as painful as the original change — if not more so.

This is why many mainframe teams default to a conservative posture. They move slowly, pad timelines, and layer in extensive manual review. Not because they aren't skilled, but because the risk of getting it wrong is too high and the tools available to them haven't evolved with the complexity of the systems they manage.

A better approach: data lineage for mainframe management

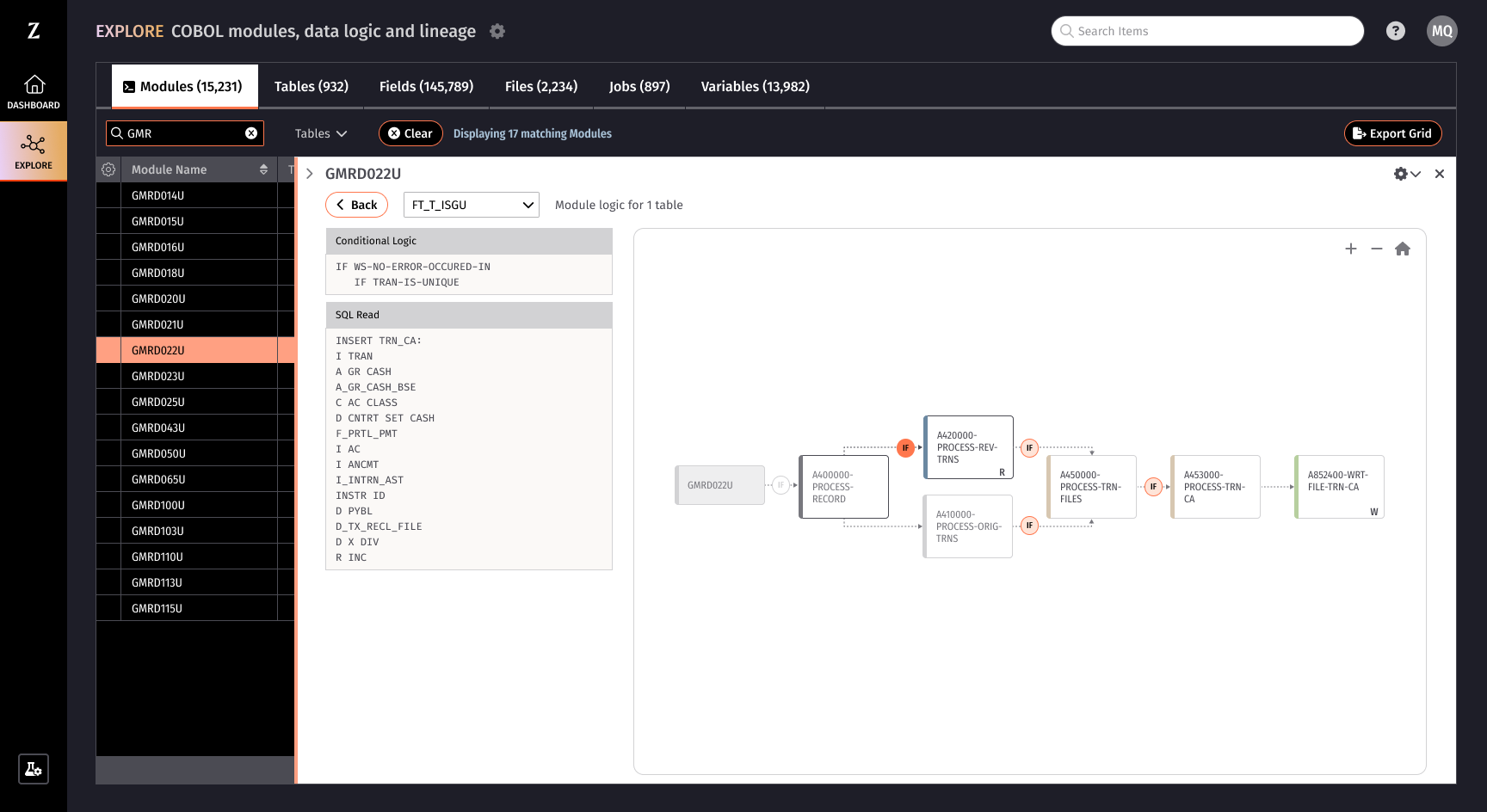

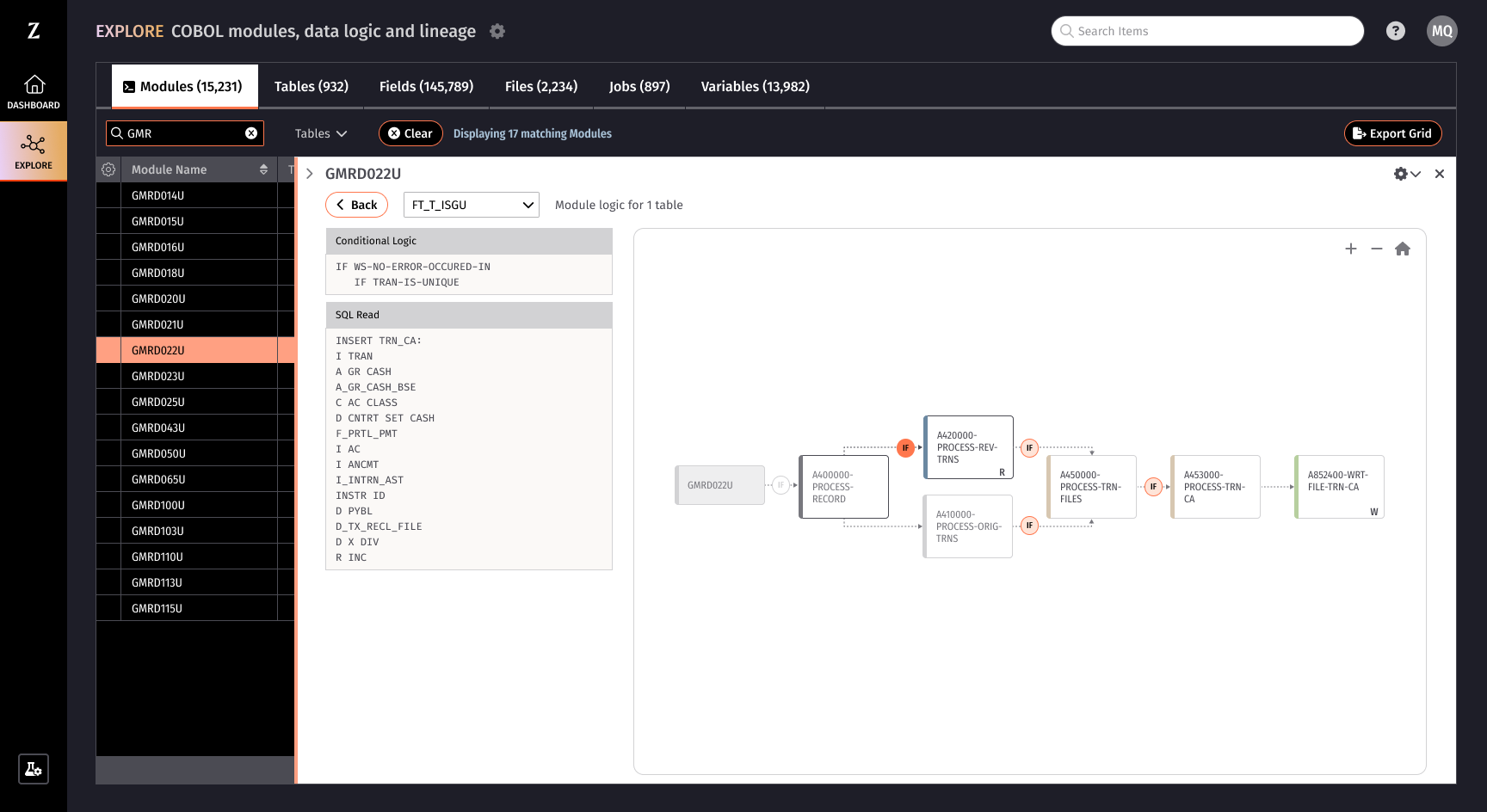

This is where mainframe data lineage changes the equation. Rather than manually tracing code paths and building dependency maps from scratch every time a change is requested, data lineage technology can parse COBOL modules at scale and generate a comprehensive, searchable view of how data flows through the system.

With data lineage in place, that same engineer who used to spend months investigating a change can now:

- Search for a specific variable, table, or field and immediately see every module that reads, writes, or updates it

- Trace the data path forward and backward to understand exactly where a value originates and where it ends up

- View calculation logic to understand the mathematical expressions and business rules embedded in the code

- Identify conditional branching to see where and why data gets treated differently based on record types or other criteria

- Understand cross-module dependencies to assess the full blast radius of a proposed change before making it

Instead of navigating thousands of lines of raw COBOL to answer a single question, the engineer gets a curated, structured view of exactly the information they need. The investigation that used to take months can happen in minutes.
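As a rough illustration of what such queries look like once the parsing is done, the Python sketch below runs a "who touches this field" lookup and a downstream "blast radius" walk over a handful of lineage facts. The facts, module names, and function names are invented for illustration and do not describe any particular product's data model.

```python
from collections import defaultdict

# Hypothetical lineage facts, as a parser might emit them:
# (module, fields it reads, fields it writes).
FACTS = [
    ("ACCRUAL1", {"ACCT-BALANCE", "ACCT-RATE"}, {"INT-AMT"}),
    ("STMT0042", {"INT-AMT"}, {"STMT-INT-AMT"}),
    ("GLPOST07", {"INT-AMT"}, {"GL-ENTRY"}),
    ("ADDRUPD3", {"CUST-ADDR"}, {"CUST-ADDR"}),
]


def who_touches(field):
    """Map each module that uses `field` to how it uses it."""
    usage = defaultdict(set)
    for module, reads, writes in FACTS:
        if field in reads:
            usage[module].add("read")
        if field in writes:
            usage[module].add("write")
    return dict(usage)


def blast_radius(field):
    """Return the modules and fields reachable downstream of `field`."""
    fields, modules, frontier = {field}, set(), [field]
    while frontier:
        current = frontier.pop()
        for module, reads, writes in FACTS:
            if current in reads:
                modules.add(module)
                for out in writes:
                    if out not in fields:
                        fields.add(out)
                        frontier.append(out)
    fields.discard(field)  # report only what lies downstream of the start
    return modules, fields


print(who_touches("INT-AMT"))
print(blast_radius("ACCT-RATE"))
```

The design choice worth noting is that the expensive step (parsing the code into facts) happens once, so each later question becomes a cheap lookup instead of a fresh investigation.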

Not just for modernization day — for every day between now and then

Much of the conversation around mainframe data lineage focuses on migration and modernization. And yes, lineage is critical for those efforts — but the value starts long before modernization kicks off.

Every time a business requirement changes, every time a regulation is updated, every time an engineer needs to write or modify code — they're navigating the same black box. Data lineage doesn't just prepare you for the future. It makes your mainframe safer and more manageable right now, during the months or years between today and the day you're ready to modernize.

For mainframe teams, it means less time investigating and more time executing. For risk and compliance leaders, it means greater confidence that changes won't introduce unintended consequences. For the business, it means faster turnaround on change requests without increasing operational risk.

And when modernization day does arrive, you'll be ready

Here's the other advantage of investing in data lineage now: when your organization is ready to modernize, you won't be starting from scratch.

Modernization isn't just about moving everything from the old system to the new one. It requires making deliberate decisions about what to bring forward and what to leave behind. Which business rules are still relevant? Which calculations need to be replicated exactly, and which should be redesigned? Which data paths reflect current requirements, and which are artifacts of decisions made decades ago?

Without lineage, those questions send teams back into the same manual investigation cycle — except now they're doing it across tens of thousands of modules under the pressure of a migration timeline. With lineage already in place, your team walks into modernization with a comprehensive understanding of how the current system works, what it does, and why.

And the value doesn't stop at cutover. Post-migration, lineage gives you a baseline for reconciliation. When the new system produces a different output than the old one — and it will — lineage helps you trace back to the original logic and understand why the results differ. Was it an intentional change? A missed business rule? A calculation that was carried over incorrectly? Instead of guessing, your team can pinpoint the source of the discrepancy and resolve it with confidence.
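A reconciliation pass of that kind can be sketched in a few lines of Python. The account keys, amounts, and the `reconcile` helper are hypothetical; the point is only that each discrepancy becomes a concrete item to trace back through lineage rather than a vague report that the numbers differ.

```python
from decimal import Decimal

# Hypothetical per-account outputs from the old and new systems.
legacy = {
    "A-100": Decimal("12.34"),
    "A-200": Decimal("5.00"),
    "A-300": Decimal("7.10"),
}
modern = {
    "A-100": Decimal("12.34"),
    "A-200": Decimal("5.01"),
}


def reconcile(old_values, new_values, tolerance=Decimal("0.00")):
    """List every key whose value is missing on one side or differs beyond tolerance."""
    diffs = []
    for key in sorted(old_values.keys() | new_values.keys()):
        old, new = old_values.get(key), new_values.get(key)
        if old is None or new is None:
            diffs.append((key, old, new, "missing"))
        elif abs(old - new) > tolerance:
            diffs.append((key, old, new, "mismatch"))
    return diffs


for key, old, new, kind in reconcile(legacy, modern):
    print(f"{key}: legacy={old} modern={new} ({kind})")
```

Each flagged account is then a starting point for a lineage lookup on the field in question, to decide whether the difference was intended, missed, or carried over incorrectly.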

The mainframe isn't the problem. The lack of visibility is.

Organizations that rely on mainframes aren't behind — they're running proven, reliable infrastructure that processes critical transactions every day. The challenge has never been the mainframe itself. It's that the tools and processes for understanding what's inside it haven't kept pace with the complexity of the systems or the speed at which the business needs to evolve.

Data lineage closes that gap. Whether modernization is two years away or five, understanding what's inside the black box isn't something you can afford to wait on. Your teams need that visibility today to manage changes safely — and they'll need it even more when the time comes to move forward.

Zengines' Mainframe Data Lineage solution parses COBOL code at scale to give your team searchable, visual access to the data paths, calculation logic, dependencies, and business rules embedded in your mainframe.

For Chief Risk Officers and Chief Compliance Officers at insurance carriers, ORSA season brings a familiar tension: demonstrating that your organization truly understands its risk exposure -- while knowing that critical calculations still run through systems nobody fully understands anymore.

The Own Risk and Solvency Assessment (ORSA) isn't just paperwork. It's a commitment to regulators that you can trace how capital adequacy gets calculated, where stress test assumptions originate, and why your models produce the outputs they do. For carriers still running policy administration, actuarial calculations, or claims processing on legacy mainframes, that commitment gets harder to keep every year.

The Documentation Problem Nobody Talks About

Most large insurers have mainframe systems that have been running -- and evolving -- for 30, 40, even 50+ years. The original architects retired decades ago. The business logic is encoded in millions of lines of COBOL across thousands of modules. And the documentation? It hasn’t been updated in years.

This creates a specific problem for ORSA compliance: when regulators ask how a particular reserve calculation works, or where a risk factor originates, the honest answer is often "we'd need to trace it through the code."

That trace can take weeks. Sometimes months. And even then, you're relying on the handful of mainframe specialists who can actually read the logic -- specialists who are increasingly close to retirement themselves.

What Regulators Actually Want to See

ORSA requires carriers to demonstrate effective risk management governance. In practice, that means showing:

- Data lineage: Where do the inputs to your risk models actually come from? Which systems touch them along the way?

- Calculation transparency: How does a policy record become a reserve estimate? What business rules apply?

- Change traceability: When you modify a calculation, what downstream impacts does that create?

For modern cloud-based systems, this is usually more straightforward: metadata catalogs, audit logs, and documentation are often built in. But for mainframe systems -- where the business logic is the documentation, buried in procedural code -- this level of transparency requires actual investigation.

The Regulatory Fire Drill

Every CRO knows the scenario: an examiner asks a pointed question about a specific calculation. Your team scrambles to trace it back through the systems. The mainframe team pulls in their most senior developer (who was already over-allocated with other work). Days pass. The answer finally emerges -- but the process exposed just how fragile your institutional knowledge has become.

These fire drills are getting more frequent, not less. Regulators have become more sophisticated about data governance expectations. And the talent pool that understands legacy COBOL systems shrinks every year.

The question isn't whether you'll face this challenge. It's whether you'll face it reactively -- during an exam -- or proactively, on your own timeline.

Extracting Lineage from Legacy Systems

The good news: you don't have to modernize your entire core system to solve the documentation problem. New AI-powered tools can parse legacy codebases and extract the data lineage that's been locked inside for decades.

This means:

- Automated tracing of how data flows through COBOL/RPG modules, job schedulers, and database schemas

- Visual mapping of calculation logic, branching conditions, and downstream dependencies

- Searchable documentation that lets compliance teams answer regulator questions in hours instead of weeks

- Preserved institutional knowledge that doesn't walk out the door when your mainframe experts retire

The goal isn't to replace your legacy systems overnight. It's to shine a light into the black box -- so you can demonstrate governance and control over systems that still run critical functions.

From Reactive to Proactive

The carriers who navigate ORSA most smoothly aren't the ones with the newest technology. They're the ones who can clearly articulate how their risk management processes work -- including the parts that run on 40-year-old infrastructure.

That clarity doesn't require a multi-year modernization program. It requires the ability to extract and visualize what your systems already do, in a format that satisfies both internal governance requirements and external regulatory scrutiny.

For CROs and CCOs managing legacy technology estates, that capability is becoming less of a nice-to-have and more of a prerequisite for confident compliance.

Zengines helps insurance carriers extract data lineage and governance controls from legacy mainframe systems. Our AI-powered platform parses COBOL code and related infrastructure to deliver the transparency regulators expect -- without requiring a rip-and-replace modernization.

TL;DR: The Quick Answer

LLM code analysis tools like ChatGPT and Copilot excel at explaining and translating specific COBOL programs you've already identified. Mainframe data lineage platforms like Zengines excel at discovering business logic across thousands of programs when you don't know where to look. Most enterprise modernization initiatives need both: data lineage to find what matters, LLMs to accelerate the work once you've found it.

---------------

When enterprises tackle mainframe modernization and legacy COBOL code analysis, two technologies dominate the conversation: Large Language Models (LLMs) and mainframe data lineage platforms. Both promise to reveal what your code does—but they solve fundamentally different problems.

LLMs like ChatGPT, GitHub Copilot, and IBM watsonx Code Assistant excel at interpreting and translating code you paste into them. Data lineage platforms like Zengines excel at discovering and extracting business logic across enterprise codebases—often millions of lines of COBOL—when you don't know where that logic lives.

Understanding this distinction determines whether your modernization initiative succeeds or stalls. This guide clarifies when each approach fits your actual need.

What LLMs and Data Lineage Platforms Actually Do

LLM code analysis tools provide deep explanations of specific code. They rewrite programs in modern languages, optimize algorithms, and tutor developers. If you know which program to analyze, LLMs accelerate understanding and translation.

Mainframe data lineage platforms find business logic you didn't know existed. They search across thousands of programs, extract calculations and conditions at enterprise scale, and prove completeness for regulatory compliance efforts such as BCBS 239.

The overlap matters: Both can show you what calculations do. The critical difference is scale and discovery. Zengines extracts calculation logic from anywhere in your codebase without requiring you to know where to look. LLMs explain and transform specific code once you identify it.

Most enterprise teams need both: data lineage to discover scope and extract system-wide business logic, LLMs to accelerate understanding and translation of specific programs.

How Each Tool "Shows You How Code Works"

The phrase "shows you how code works" means different things for each tool—and the distinction matters for mainframe modernization projects.

Traditional (schema-based) lineage tools show that Field A flows to Field B, but not what happens during that transformation. They map connections without revealing logic.

Code-based lineage platforms like Zengines extract the actual calculation:

PREMIUM = BASE_RATE * RISK_FACTOR * (1 + ADJUSTMENT)

...along with the conditions that govern when it applies:

IF CUSTOMER_TYPE = 'COMMERCIAL' AND REGION = 'EU'

This reveals business rules governing when logic applies across your entire system.
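Put together, an extracted rule pairs a formula with the condition that guards it. The Python sketch below restates the two fragments above as one function; the function name and argument names are assumptions, and it returns `None` outside the stated condition because the fragments do not say which rule applies there.

```python
from decimal import Decimal


def premium(base_rate, risk_factor, adjustment, customer_type, region):
    """Apply the extracted formula only when its guarding condition holds."""
    if customer_type == "COMMERCIAL" and region == "EU":
        return base_rate * risk_factor * (1 + adjustment)
    # Assumption: other customer types and regions are governed by rules
    # that the fragments above do not show.
    return None


print(premium(Decimal("100"), Decimal("1.5"), Decimal("0.1"), "COMMERCIAL", "EU"))
print(premium(Decimal("100"), Decimal("1.5"), Decimal("0.1"), "RETAIL", "EU"))
```

Seeing the formula and its condition side by side is what separates code-based lineage from a map that only records that one field feeds another.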

LLMs explain code line-by-line, clarify algorithmic intent, suggest optimizations, and generate alternatives—but only for code you paste into them.

The key difference: Zengines shows you calculations across 5,000 programs without requiring you to know where to look. LLMs explain calculations in depth once you know which program matters. Both "show how code works," but at different scales for different purposes.

When to Use LLMs vs. Data Lineage Platforms

The right tool depends on the question you're trying to answer. Use this table to identify whether your challenge calls for an LLM, a data lineage platform, or both.

Notice the pattern: LLMs shine when you've already identified the code in question. Zengines shines when you need to find or trace logic across an unknown scope.

LLM vs. Data Lineage Platform: Feature Comparison

Beyond specific use cases, it helps to understand how these tools differ in design and outcomes. This comparison highlights what each tool is built for—and where each falls short.

How to Use LLMs and Data Lineage Together

Successful enterprise modernization initiatives use both tools strategically. Here's the workflow that works:

- Zengines discovers scope: "Find all programs touching customer credit calculation" — returns 47 programs with actual calculation logic extracted.

- Zengines diagnoses issues: "Why do System A and System B produce different results?" — shows where logic diverges across programs.

- LLM accelerates implementation: Take specific programs identified by Zengines and use an LLM to explain details, generate Java equivalents, and create tests.

- Zengines validates completeness: Prove to auditors that the initiative covered all logic paths and transformations.
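The hand-off between the first and third steps can be pictured with a small Python sketch. It is illustrative only: a plain field-name match stands in for a real lineage query, the program names and source snippets are invented, and `build_prompt` just assembles text for whichever LLM tool a team uses rather than calling any specific API.

```python
import re

# Hypothetical program sources keyed by program name.
PROGRAMS = {
    "CRDCALC1": "COMPUTE CREDIT-SCORE = BASE-SCORE + PAYMENT-ADJ.",
    "CRDRPT02": "MOVE CREDIT-SCORE TO RPT-CREDIT-SCORE.",
    "ADDRUPD3": "MOVE NEW-ADDR TO CUST-ADDR.",
}


def discover(programs, field):
    """Return the programs that mention `field` as a whole COBOL name."""
    # Lookarounds keep CREDIT-SCORE from matching inside RPT-CREDIT-SCORE.
    pattern = re.compile(r"(?<![A-Z0-9-])" + re.escape(field) + r"(?![A-Z0-9-])")
    return sorted(name for name, source in programs.items() if pattern.search(source))


def build_prompt(name, source):
    """Assemble the text a developer would hand to an LLM for one program."""
    return (
        f"Explain the business logic in COBOL program {name} "
        f"and list the fields it reads and writes:\n\n{source}"
    )


shortlist = discover(PROGRAMS, "CREDIT-SCORE")
prompts = [build_prompt(name, PROGRAMS[name]) for name in shortlist]
print(shortlist)
```

The split mirrors the workflow: discovery narrows the whole codebase to a shortlist, and only the shortlisted programs are sent on for detailed explanation or translation.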

Why Teams Confuse LLMs with Data Lineage Tools

Many teams successfully use LLMs to port known programs and assume this scales to enterprise-wide COBOL modernization. The confusion happens because:

- 80% of programs may be straightforward — well-documented, isolated, known scope.

- LLMs work great on this 80% — fast translation, helpful explanations.

- The 20% with hidden complexity stops initiatives — cross-program dependencies, undocumented business rules, conditional logic spread across multiple files.

Teams don't realize they have a system-level problem until deep into the initiative when they discover programs or dependencies they didn't know existed.

The Bottom Line: Choose Based on Your Problem

LLM code analysis and mainframe data lineage platforms solve different problems:

- LLMs excel at code-level interpretation and generation for known programs.

- Data lineage platforms excel at system-scale discovery and extraction across thousands of programs.

The critical distinction isn't whether they can show you what code does—both can. The distinction is scale, discovery, and proof of completeness.

For enterprise mainframe modernization, regulatory compliance, and large-scale initiatives, you need both. Data lineage platforms like Zengines find what matters across your entire codebase and prove you didn't miss anything. LLMs then accelerate the mechanical work of understanding and translating what you found.

The question isn't "which tool should I use?" It's "which problem am I solving right now?"

See How Zengines Complements Your LLM Tools

If you're planning a mainframe modernization initiative, regulatory compliance project, or enterprise-wide code analysis, we'd love to show you how Zengines works alongside your existing LLM tools.

Schedule a demo to see our mainframe data lineage platform in action with your use case.
