CEO to CEO – Why You Need to Care about Data Conversion: It’s Your Reputation and Revenue

As a fellow SaaS CEO, I understand that building a positive reputation and brand drives revenue. On the surface, this sounds like a simple and relatively straightforward task: provide a good product, take care of your customers, and deliver on the promise you made to them. What I discovered as an executive at Accenture is that no matter how capable your SaaS product is, if you can’t onboard a customer effectively and efficiently, your product’s reputation and revenue are at significant risk. This is why I co-founded Zengines.

The Onboarding Devil is in the Data Conversion Details

Data conversion is rarely a CEO’s first concern, but this seemingly “in the weeds” detail is a strategic risk to your revenue, and explaining why is the purpose of this blog. We conducted a survey, and two findings jumped out:

- 68% of customers say converting data from old systems is the #1 challenge for moving to new platforms.

- Over 90% said they are converting/consolidating data from multiple systems when they deploy a major new SaaS platform.

The “so what”: your reputation and revenue are at risk when you (or your partner) fail to onboard your customer’s required data to your SaaS platform and deliver the value your customers expect. Three fundamental issues complicate data conversion:

- Manual guesswork: Data from multiple systems must be mapped and aligned in format or structure before it can be imported directly into your SaaS platform, and this work is manual, slow, and laborious guesswork.

- Lack of experienced resources, especially when you need them: The skill sets and people required for this critical task are scarce, and they can only scale with some automation assistance.

- Lack of repeatability: Because each data conversion is a detail-oriented task requiring contextual understanding, onboarding teams reinvent the wheel every time.
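
To make the “manual guesswork” concrete, here is a minimal Python sketch of what mapping two legacy sources onto one target schema involves. The system names, field names, and the `SOURCE_MAPS` structure are hypothetical; a real conversion also needs type coercion, deduplication, and validation.

```python
# Hypothetical per-source field maps: legacy field name -> target field name.
SOURCE_MAPS = {
    "legacy_crm": {"CUST_NM": "customer_name", "ST": "state", "ACCT_NO": "account_id"},
    "billing_db": {"name": "customer_name", "region": "state", "acct": "account_id"},
}

TARGET_FIELDS = ["account_id", "customer_name", "state"]


def to_target(source: str, record: dict) -> dict:
    """Rename one legacy record's fields into the target schema."""
    field_map = SOURCE_MAPS[source]
    out = {field: None for field in TARGET_FIELDS}
    for legacy_field, value in record.items():
        target_field = field_map.get(legacy_field)
        # Legacy fields with no entry in the map are skipped here;
        # a real tool would flag them for review.
        if target_field is not None:
            out[target_field] = value
    return out


def consolidate(batches: dict) -> list:
    """Convert records from every source system into one list of target rows."""
    rows = []
    for source, records in batches.items():
        for record in records:
            row = to_target(source, record)
            row["source_system"] = source  # keep provenance for later reconciliation
            rows.append(row)
    return rows


batches = {
    "legacy_crm": [{"CUST_NM": "Ada Lovelace", "ST": "NY", "ACCT_NO": "001"}],
    "billing_db": [{"name": "Alan Turing", "region": "MA", "acct": "002"}],
}
rows = consolidate(batches)
print(rows)
```

Every additional source system means writing and checking another map like these by hand, which is where the repeatability problem comes from.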

Why Data Conversion is a Good Task For AI

We started using AI at Zengines long before AI became the year's buzzword. Pattern recognition and anomaly identification are at the heart of the data conversion process, which makes it ideal for an AI-based tool. Zengines AI helps you accelerate and scale onboarding, whether your professional services team, a Big Four consulting firm, or another partner is implementing your platform. Our system automates the data mapping and transformation rules, identifies anomalies, lets the experts confirm and augment the required transformations, and executes the data conversion to create a successful onboarding process.
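
To illustrate the kind of pattern recognition involved, here is a deliberately simple, non-AI Python sketch: each value is reduced to a character-class “shape”, and values whose shape is rare within the column are flagged for an expert to review. It is not how Zengines works; the `shape` and `find_anomalies` helpers and the `min_share` threshold of 0.2 are assumptions for illustration.

```python
from collections import Counter


def shape(value: str) -> str:
    """Map letters to 'A', digits to '9', and keep other characters as-is."""
    out = []
    for ch in value:
        if ch.isalpha():
            out.append("A")
        elif ch.isdigit():
            out.append("9")
        else:
            out.append(ch)
    return "".join(out)


def find_anomalies(values: list, min_share: float = 0.2) -> list:
    """Return values whose shape occurs in less than min_share of the column.

    The 0.2 default threshold is an arbitrary, hypothetical choice.
    """
    counts = Counter(shape(v) for v in values)
    total = len(values)
    return [v for v in values if counts[shape(v)] / total < min_share]


zip_codes = ["02134", "10001", "94105", "60601", "30303",
             "2134", "ABCDE", "73301", "98101", "33101"]
print(find_anomalies(zip_codes))
```

Here `2134` is the kind of value produced when a ZIP code column passes through a numeric type and loses its leading zero; the sketch only flags it, and an expert would decide whether to pad it.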

Data Conversion Drives Revenue Generation

Every legacy platform integrated into your platform is more revenue for your company. This can’t happen without the ability to quickly understand, move, and convert your customer’s data. Whether you get paid by the number of users (Salesforce.com), the number of transactions (Zapier), the number of modules implemented (HubSpot), or the amount of data you manage (Splunk), your revenue growth is limited if the data in legacy systems is not converted to your platform. This is why data conversion drives revenue generation.

Zengines AI-Driven Data Conversion to Accelerate Onboarding

Zengines helps your company deliver value faster by accelerating onboarding, optimizing your professional services teams, and adding repeatability to the process.

- Accelerate Time to Value - Zengines is an end-to-end data conversion solution that gets the job of data conversion done so customers get to value faster.

- AI-based Data Conversion is 6X Faster - Zengines AI-based automation is 6X faster than traditional CSV file-based data conversion methods.

- Scale Your Limited Resources – Zengines makes data conversion a highly repeatable process to scale your team and optimize resources.

- Optimize and Automate Onboarding – Zengines AI automates the data conversion, including profiling, mapping, loading, and testing data conversion to optimize onboarding.

Get started with Zengines

As CEO, your priority is delivering value to your customers in a way that enhances your company’s reputation and its top and bottom lines. In that light, this is the question you should be asking about data conversion: is your customer onboarding fast and repeatable? Zengines is here to help you achieve that.

You may also like

For Chief Risk Officers and Chief Actuaries at European insurers, Solvency II compliance has always demanded rigorous governance over how capital requirements get calculated. But as the framework evolves, with Directive 2025/2 now in force and Member States transposing its amendments by January 2027, the bar for data transparency is rising. And for carriers still running actuarial calculations, policy administration, or claims processing on legacy mainframes or AS/400s, meeting that bar gets harder every year.

Solvency II isn't just about holding enough capital. It's about proving you understand why your models produce the numbers they do — where the inputs originate, how they flow through your systems, and what business logic transforms them along the way. For insurers whose critical calculations still run on legacy languages like COBOL or RPG, that proof is becoming increasingly difficult to produce.

What Solvency II Actually Requires of Your Data

At its core, Solvency II's data governance requirements are deceptively simple. Article 82 of the Directive requires insurers to have processes in place ensuring that the data used to calculate technical provisions is accurate, complete, and appropriate.

The Delegated Regulation (Articles 19-21 and 262-264) adds specificity around governance, internal controls, and modeling standards. EIOPA's guidelines go further, recommending that insurers implement structured data quality frameworks with regular monitoring, documented traceability, and clear management rules.

In practice, this means insurers need to demonstrate:

- Data traceability: A clear, auditable path from source data through every transformation to the final regulatory output — whether that's a Solvency Capital Requirement calculation, a technical provision, or a Quantitative Reporting Template submission.

- Calculation transparency: How does a policy record become a reserve estimate? What actuarial assumptions apply, and where do they come from?

- Data quality governance: Structured frameworks with defined roles, KPIs, and continuous monitoring — not just point-in-time checks during reporting season.

- Impact analysis capability: If an input changes, what downstream calculations and reports are affected?
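
Impact analysis is, at heart, a graph question. A minimal Python sketch, assuming lineage has already been captured as directed edges from each data element to the elements derived from it (every node name below is hypothetical), finds everything downstream of a changed input with a breadth-first search:

```python
from collections import deque

# Hypothetical lineage edges: each element maps to the elements computed from it.
LINEAGE = {
    "policy.sum_assured": ["reserve.best_estimate"],
    "assumption.mortality_table": ["reserve.best_estimate"],
    "reserve.best_estimate": ["tp.technical_provisions", "scr.life_underwriting"],
    "tp.technical_provisions": ["report.qrt_technical_provisions"],
    "scr.life_underwriting": ["report.qrt_scr"],
}


def downstream(element: str, edges: dict) -> list:
    """Return every element reachable from `element`, in breadth-first order."""
    seen = set()
    order = []
    queue = deque([element])
    while queue:
        current = queue.popleft()
        for child in edges.get(current, []):
            if child not in seen:  # guard against cycles and shared branches
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


print(downstream("assumption.mortality_table", LINEAGE))
```

Answering "what is affected if the mortality table changes?" then becomes a lookup rather than a manual investigation, provided the edges themselves are complete and accurate.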

For modern cloud-based platforms with well-documented APIs and metadata catalogs, these requirements are manageable. But for the legacy mainframe or AS/400 systems that still process the majority of core insurance transactions at many European carriers, this level of transparency requires genuine investigation.

The Legacy System Problem That Keeps Getting Worse

Many large European insurers run core business logic on mainframe or AS/400 systems that have been evolving for 30, 40, even 50+ years. Policy administration, claims processing, actuarial calculations, reinsurance — the systems that generate the numbers feeding Solvency II models were often written in COBOL by engineers who retired decades ago.

The documentation hasn't kept pace. In many cases, it was never comprehensive to begin with. Business rules were encoded directly into procedural code, updated incrementally over the years, and rarely re-documented after changes. The result is millions of lines of code that effectively are the documentation — if you can read them.

This creates a compounding problem for Solvency II compliance:

When supervisors or internal audit ask how a specific reserve calculation works, or where a risk factor in your internal model originates, the answer too often requires someone to trace it through the code manually. That trace depends on a shrinking pool of specialists who understand legacy COBOL systems — specialists who are increasingly close to retirement across the European insurance industry.

Every year the knowledge gap widens. And every year, the regulatory expectations for data transparency increase.

The Regulatory Pressure Is Intensifying

The Solvency II framework isn't standing still. The amending Directive published in January 2025 introduces significant updates that amplify data governance demands:

- Enhanced ORSA requirements now mandate analysis of macroeconomic scenarios and systemic risk conditions — requiring even more data inputs with clear provenance.

- Expanded reporting obligations split the Solvency and Financial Condition Report into separate sections for policyholders and market professionals, each requiring precise, auditable data.

- New audit requirements mandate that the balance sheet disclosed in the SFCR be subject to external audit — increasing scrutiny on the data chain underlying reported figures.

- Climate risk integration requires insurers to assess and report on climate-related financial risks, adding new data dimensions that must be traceable through existing systems.

National supervisors across Europe — from the ACPR in France to BaFin in Germany to the PRA in the UK — are tightening their expectations in parallel. The ACPR, for instance, has been specifically increasing its focus on the quality of data used by Solvency II functions, requiring actuarial, risk management, and internal audit teams to demonstrate traceability and solid evidence.

And the consequences of falling short are becoming tangible. Pillar 2 capital add-ons, supervisory intervention, and in severe cases, questions about the suitability of responsible executives — these aren't theoretical outcomes. They're tools that European supervisors have demonstrated willingness to use.

The Supervisory Fire Drill

Every CRO at a European insurer knows the scenario: a supervisor asks a pointed question about how a specific technical provision was calculated, or requests that you trace a data element from source through to its appearance in a QRT submission. Your team scrambles. The mainframe or AS/400 specialists — already stretched thin — get pulled from other work. Days or weeks pass before the answer materializes.

These examinations are becoming more frequent and more granular. Supervisors aren't just asking for high-level descriptions of data flows. They want attribute-level traceability. They want to see the actual business logic that transforms raw policy data into the numbers in your regulatory reports.

For carriers whose critical processing runs through legacy mainframes or AS/400s, these requests expose a fundamental vulnerability: institutional knowledge that exists only in people's heads, supported by code that only a handful of specialists can interpret.

The question isn't whether your supervisor will ask. It's whether you'll be able to answer confidently when they do.

Extracting Lineage from Legacy Systems

The good news: you don't have to replace your entire core system to solve the transparency problem. AI-powered tools can now parse legacy codebases and extract the data lineage that's been locked inside for decades.

This means:

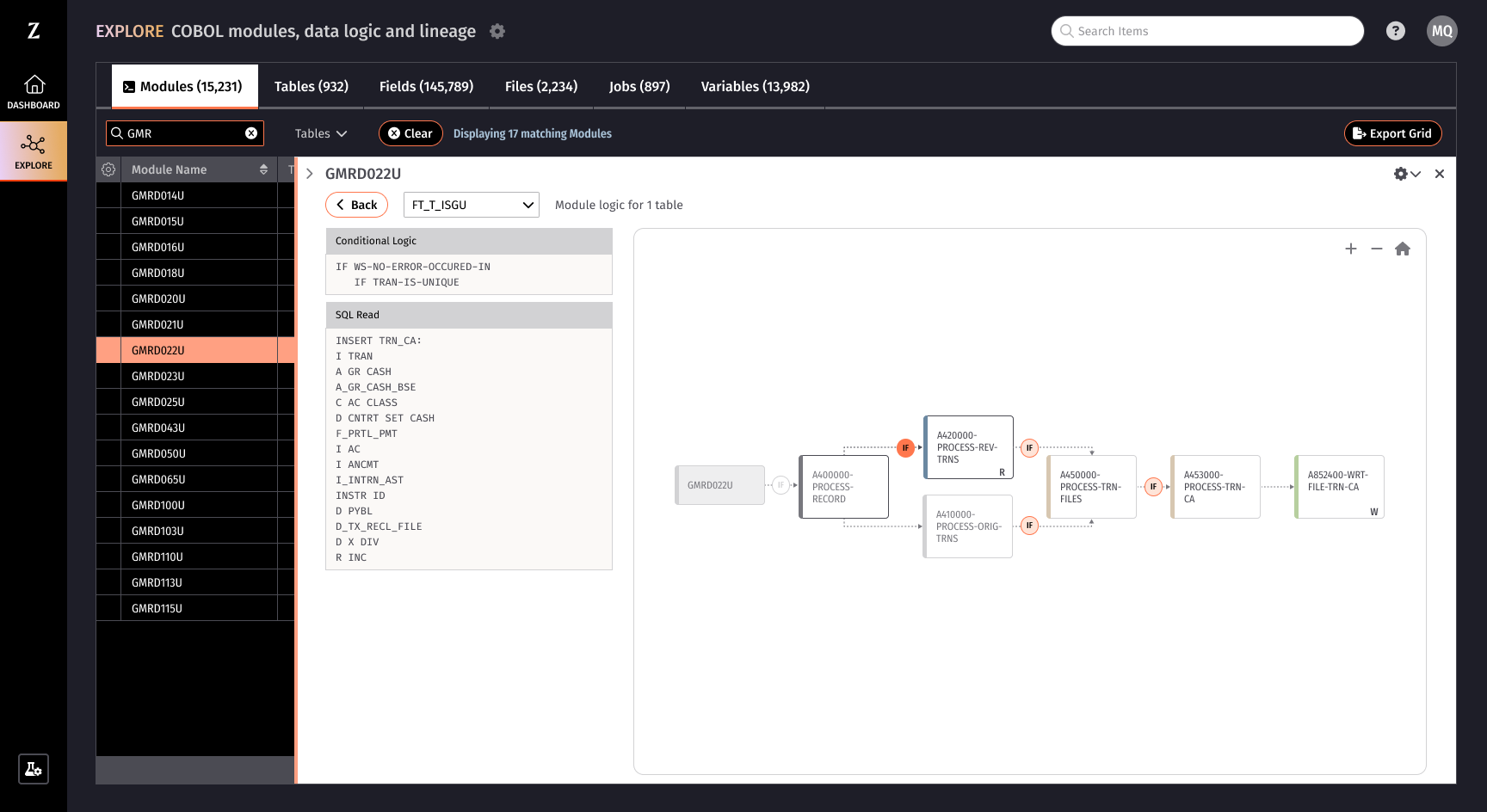

- Automated tracing of how data flows through COBOL and RPG modules, job schedulers, and database operations — across thousands of programs, without needing to know where to look.

- Calculation logic extraction that reveals the actual mathematical expressions and business rules governing how risk data gets transformed — not just that Field A maps to Field B, but what happens during that transformation.

- Visual mapping of branching conditions and downstream dependencies, so compliance teams can answer supervisor questions in hours instead of weeks.

- Preserved institutional knowledge that doesn't walk out the door when your legacy specialists retire — because the logic is documented in a searchable, auditable format.
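
To give a feel for what calculation logic extraction means at the smallest scale, here is a deliberately naive Python sketch. It pulls `MOVE` and `COMPUTE` statements out of a COBOL fragment and records which fields feed which. It is an illustration only: real COBOL parsing must handle copybooks, `REDEFINES`, `PERFORM` paths, continuation lines, and many more verbs. The sketch also ignores the `IF` condition entirely, which is exactly the branching context a real lineage tool has to capture. The field names are hypothetical.

```python
import re

# Hypothetical COBOL fragment; field names are invented for illustration.
COBOL_SNIPPET = """
           MOVE WS-PREMIUM TO WS-BASE-AMT.
           COMPUTE WS-RESERVE = WS-BASE-AMT * WS-RESERVE-FACTOR.
           IF POLICY-TYPE = 'T'
               COMPUTE WS-RESERVE = WS-RESERVE * 1.05
           END-IF.
"""

# Naive patterns: one statement per line, no ROUNDED, no continuation lines.
MOVE_RE = re.compile(r"\bMOVE\s+([A-Z0-9-]+)\s+TO\s+([A-Z0-9-]+)")
COMPUTE_RE = re.compile(r"\bCOMPUTE\s+([A-Z0-9-]+)\s*=\s*(.+?)\.?\s*$")
# Assumes data names contain at least one hyphen (common, but not universal).
FIELD_RE = re.compile(r"\b[A-Z][A-Z0-9]*(?:-[A-Z0-9]+)+\b")


def extract_lineage(source: str) -> list:
    """Return (target, inputs, expression) tuples for MOVE and COMPUTE statements."""
    results = []
    for line in source.splitlines():
        move = MOVE_RE.search(line)
        if move:
            results.append((move.group(2), [move.group(1)], move.group(1)))
            continue
        compute = COMPUTE_RE.search(line)
        if compute:
            target, expression = compute.group(1), compute.group(2).strip()
            inputs = FIELD_RE.findall(expression)
            results.append((target, inputs, expression))
    return results


for target, inputs, expression in extract_lineage(COBOL_SNIPPET):
    print(f"{target} <- {inputs}  ({expression})")
```

Even this toy version shows the difference between "Field A maps to Field B" and the actual expression applied along the way.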

The goal isn't to decommission your legacy systems overnight. It's to shine a light into the black box — so you can demonstrate the governance and control that Solvency II demands over systems that still run your most critical functions.

From Compliance Burden to Strategic Advantage

The European insurers who navigate Solvency II most smoothly aren't necessarily the ones with the newest technology. They're the ones who can clearly articulate how their risk management processes work — including the parts that run on infrastructure built before many of today's actuaries were born.

That clarity doesn't require a multi-year transformation program. It requires the ability to extract and document what your systems already do, in a format that satisfies both internal governance requirements and supervisory scrutiny.

For CROs, Chief Actuaries, and compliance leaders managing legacy technology estates, that capability is rapidly moving from nice-to-have to essential — especially as the 2027 transposition deadline for the amended Solvency II Directive approaches.

The carriers that invest in legacy system transparency now won't just be better prepared for their next supervisory review. They'll have a foundation for every modernization decision that follows — because you can't confidently change what you don't fully understand.

Zengines helps European insurers extract data lineage and calculation logic from legacy mainframe or AS/400 systems. Our AI-powered platform parses COBOL and RPG code and related infrastructure to deliver the transparency that Solvency II demands — without requiring a rip-and-replace modernization.

Every data migration has a moment of truth — when stakeholders ask, "Is everything actually correct in the new system?" Most teams don’t have the tools they need to answer that question.

Data migrations consume enormous time and budget. But for many organizations, the hardest part isn't moving the data — it's proving it arrived correctly. Post-migration reconciliation is the phase where confidence is either built or broken, where regulatory obligations are met or missed, and where the difference between a successful go-live and a costly rollback becomes clear.

For enterprises in financial services — and the consulting firms guiding them through modernization — reconciliation isn't optional. The goal of any modernization, vendor change, or M&A integration is value realization — and reconciliation is the bookend that proves the change worked, giving stakeholders and regulators the confidence to move forward.

The Reconciliation Gap

Most migration programs follow a familiar arc: assess the source data, map it to the target schema, transform it to meet the new system's requirements, load it, and validate. On paper, it's linear. In practice, the validation step is where many programs stall.

Here's why. Reconciliation requires you to answer a deceptively simple question: Does the data in the new system accurately represent what existed in the old one — and does it behave the same way?

That question has layers. At the surface level, it's a record count exercise — did all 2.3 million accounts make it across? But beneath that, reconciliation means confirming that values transformed correctly, that business logic was preserved, that calculated fields produce the same results, and that no data was silently dropped or corrupted in transit.
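
The first layers of that question can be sketched in a few lines of Python: compare record counts, find keys present on only one side, and list field values that differ for matching keys. The record layout and the `account_id` key are hypothetical. A real reconciliation would also need tolerance rules for calculated fields and a way to recognize approved transformations.

```python
def reconcile(source: list, target: list, key: str) -> dict:
    """Compare two lists of dict records keyed by `key` (keys assumed unique)."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}

    # Field-by-field comparison for records that exist on both sides.
    mismatches = []
    for k in sorted(src.keys() & tgt.keys()):
        for field, src_value in src[k].items():
            tgt_value = tgt[k].get(field)
            if src_value != tgt_value:
                mismatches.append((k, field, src_value, tgt_value))

    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "value_mismatches": mismatches,
    }


legacy = [
    {"account_id": "A1", "balance": "100.00", "status": "OPEN"},
    {"account_id": "A2", "balance": "250.50", "status": "OPEN"},
    {"account_id": "A3", "balance": "75.25", "status": "CLOSED"},
]
migrated = [
    {"account_id": "A1", "balance": "100.00", "status": "OPEN"},
    {"account_id": "A2", "balance": "250.05", "status": "OPEN"},
]

report = reconcile(legacy, migrated, key="account_id")
print(report)
```

The count check alone would say "3 vs 2"; the key and value comparisons say which account was dropped and which balance was corrupted.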

For organizations subject to regulatory frameworks like BCBS 239, CDD, or CIP, reconciliation also means demonstrating an auditable trail. Regulators don't just want to know that data moved — they want evidence that you understood what moved, why it changed, and that you can trace any value back to its origin.

Why Reconciliation Is So Difficult

Three factors make post-migration reconciliation consistently harder than teams anticipate.

- The source system is often a black box. When you're migrating off a legacy mainframe or a decades-old custom application, the business logic embedded in that system may not be documented anywhere. Interest calculations, fee structures, conditional processing rules — these live in COBOL modules, job schedulers, and tribal knowledge. You can't reconcile output values if you don't understand how they were originally computed.

- Transformation introduces ambiguity. Data rarely moves one-to-one. Fields get split, concatenated, reformatted, and coerced into new data types. A state abbreviation becomes a full state name. A combined name field becomes separate first and last name columns. Each transformation is a potential point of divergence, and without a systematic way to trace what happened, discrepancies become investigative puzzles rather than straightforward fixes.

- Scale makes manual verification impossible. A financial institution migrating off a mainframe might be dealing with tens of thousands of data elements spread across thousands of modules. Spot-checking a handful of records doesn't provide the coverage that stakeholders and regulators require. But exhaustive manual comparison across millions of records, hundreds of fields, and complex calculated values simply doesn't scale.
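
The two transformations mentioned above, expanding a state abbreviation and splitting a combined name field, can be sketched in Python with a log entry for every value touched. That log turns a later discrepancy into a lookup rather than a puzzle. The lookup table is truncated to a few states, the "LAST, FIRST" name format is an assumption, and the `TransformLog` structure is hypothetical.

```python
from dataclasses import dataclass, field

# Truncated, illustrative lookup table.
STATE_NAMES = {"NY": "New York", "MA": "Massachusetts", "CA": "California"}


@dataclass
class TransformLog:
    """Hypothetical audit log: one entry per rule applied to a record."""
    entries: list = field(default_factory=list)

    def record(self, record_id: str, rule: str, before, after) -> None:
        self.entries.append({"record": record_id, "rule": rule, "before": before, "after": after})


def transform(record: dict, log: TransformLog) -> dict:
    out = {"id": record["id"]}

    # Rule 1: expand a state abbreviation; unknown codes pass through unchanged,
    # so the log shows before == after for them.
    code = record["state"]
    out["state"] = STATE_NAMES.get(code, code)
    log.record(record["id"], "state_abbrev_to_name", code, out["state"])

    # Rule 2: split "LAST, FIRST" into two columns (assumed source format).
    last, _, first = record["name"].partition(",")
    out["last_name"], out["first_name"] = last.strip(), first.strip()
    log.record(record["id"], "split_name", record["name"], (out["last_name"], out["first_name"]))

    return out


log = TransformLog()
row = transform({"id": "C-17", "state": "MA", "name": "Turing, Alan"}, log)
print(row)
for entry in log.entries:
    print(entry)
```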

A Better Approach: Build Reconciliation Into the Migration, Not After It

The most effective migration programs don't treat reconciliation as a phase that happens at the end. They build verifiability into every step — so that by the time data lands in the new system, the evidence trail already exists.

This requires two complementary capabilities: intelligent migration tooling that tracks every mapping and transformation decision, and deep lineage analysis that surfaces the logic embedded in legacy systems so you actually know what "correct" looks like.

Getting the Data There — With Full Traceability

The mapping and transformation phase of any migration is where most reconciliation problems originate. When a business analyst maps a source field to a target field, applies a transformation rule, and moves on, that decision needs to be recorded — not buried in a spreadsheet that gets versioned twelve times.

AI-powered migration tooling can accelerate this phase significantly. Rather than manually comparing schemas side by side, pattern recognition algorithms can predict field mappings based on metadata, data types, and sample values, then surface confidence scores so analysts can prioritize validation effort where it matters most. Transformation rules — whether written manually or generated through natural language prompts — are applied consistently and logged systematically.
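
As a rough illustration of mapping prediction with confidence scores, here is a minimal sketch that uses only Python's standard library. Each source/target pair is scored by field-name similarity (via `difflib.SequenceMatcher`) blended with overlap in sample values. This simple heuristic stands in for the pattern-recognition models described above and is not Zengines' method. The 50/50 weighting and all field names are assumptions.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lower-case a field name and drop separators so CUST_NM and cust-nm compare equal."""
    return name.lower().replace("_", "").replace("-", "")


def score(src_name: str, src_samples: list, tgt_name: str, tgt_samples: list) -> float:
    """Blend name similarity and sample-value overlap (Jaccard) into a 0..1 confidence."""
    name_sim = SequenceMatcher(None, normalize(src_name), normalize(tgt_name)).ratio()
    src_set, tgt_set = set(src_samples), set(tgt_samples)
    union = src_set | tgt_set
    overlap = len(src_set & tgt_set) / len(union) if union else 0.0
    return 0.5 * name_sim + 0.5 * overlap  # equal weighting is an arbitrary assumption


def predict_mappings(source: dict, target: dict) -> list:
    """Pick the best-scoring target field for each source field."""
    predictions = []
    for src_name, src_samples in source.items():
        scored = [
            (score(src_name, src_samples, tgt_name, tgt_samples), tgt_name)
            for tgt_name, tgt_samples in target.items()
        ]
        confidence, best = max(scored)
        predictions.append((src_name, best, round(confidence, 2)))
    # Lowest confidence first, so analysts review the least certain mappings first.
    return sorted(predictions, key=lambda p: p[2])


source_fields = {
    "CUST_STATE": ["NY", "MA", "CA"],
    "ACCT_OPEN_DT": ["2019-01-04", "2020-06-30"],
    "CUST_NM": ["Ada Lovelace", "Alan Turing"],
}
target_fields = {
    "state_code": ["CA", "TX", "NY"],
    "account_open_date": ["2020-06-30", "2021-02-11"],
    "customer_name": ["Grace Hopper", "Alan Turing"],
}

for src, tgt, conf in predict_mappings(source_fields, target_fields):
    print(f"{src:<13} -> {tgt:<18} confidence {conf}")
```

Sorting by confidence is the design choice that matters here: the analyst's attention goes to the weakest predictions, and every accepted mapping can be logged alongside its score and the samples that supported it.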

The result is that when a stakeholder later asks, "Why does this field look different in the new system?" — the answer is traceable. You can point to the specific mapping decision, the transformation rule that was applied, and the sample data that validated the match. That traceability is foundational to reconciliation.

Understanding What "Right" Actually Means — Legacy System Lineage

Reconciliation gets exponentially harder when the source system is a mainframe running COBOL code that was last documented in the 1990s. When the new system produces a different calculation result than the old one, someone has to determine whether that's a migration error or simply a difference in business logic between the two platforms.

This is where mainframe data lineage becomes critical. By parsing COBOL modules, job control language, SQL, and associated files, lineage analysis can surface the calculation logic, branching conditions, data paths, and field-level relationships that define how the legacy system actually works — not how anyone thinks it works.

Consider a practical example: after migrating to a modern cloud platform, a reconciliation check reveals that an interest accrual calculation in the new system produces a different result than the legacy mainframe. Without lineage, the investigation could take weeks. An analyst would need to manually trace the variable through potentially thousands of lines of COBOL code, across multiple modules, identifying every branch condition and upstream dependency.

With lineage analysis, that same analyst can search for the variable, see its complete data path, understand the calculation logic and conditional branches that affect it, and determine whether the discrepancy stems from a migration error or a legitimate difference in how the two systems compute the value. What took weeks now takes hours — and the finding is documented, not locked in someone's head.
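
The "search for the variable, see its complete data path" step can be sketched as a backward walk over field-level lineage. In this Python sketch each derived field is assumed to carry the module that computes it, a summary of its logic, and its input fields. All module names, field names, and logic strings are hypothetical. The analyst sees not only where a value came from but also the logic applied along the way.

```python
# Hypothetical lineage: field -> (module, summary of logic, input fields).
LINEAGE = {
    "ACCRUED-INT": ("INTCALC", "PRINCIPAL-BAL * DAILY-RATE * DAYS-IN-PERIOD",
                    ["PRINCIPAL-BAL", "DAILY-RATE", "DAYS-IN-PERIOD"]),
    "DAILY-RATE": ("RATESET", "ANNUAL-RATE / DAY-COUNT-BASIS",
                   ["ANNUAL-RATE", "DAY-COUNT-BASIS"]),
    "DAY-COUNT-BASIS": ("RATESET", "IF PRODUCT-CODE = 'CD' THEN 365 ELSE 360",
                        ["PRODUCT-CODE"]),
}


def trace_back(field: str, lineage: dict, depth: int = 0, seen=None) -> list:
    """Return indented lines describing how `field` is derived, back to raw inputs."""
    seen = set() if seen is None else seen
    indent = "  " * depth
    if field not in lineage:
        return [f"{indent}{field}  (source input)"]
    if field in seen:  # avoid re-expanding a field already shown
        return [f"{indent}{field}  (already traced)"]
    seen.add(field)
    module, logic, inputs = lineage[field]
    lines = [f"{indent}{field} = {logic}  [{module}]"]
    for source_field in inputs:
        lines.extend(trace_back(source_field, lineage, depth + 1, seen))
    return lines


print("\n".join(trace_back("ACCRUED-INT", LINEAGE)))
```

In this invented example, if the new platform applied a single day-count basis to every product, the trace would point straight at the conditional in `RATESET` as a likely legitimate difference rather than a migration error.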

Bringing Both Sides Together

The real power of combining intelligent migration with legacy lineage is that reconciliation becomes a structured, evidence-based process rather than an ad hoc investigation.

When you can trace a value from its origin in a COBOL module, through the transformation rules applied during migration, to its final state in the target system — you have end-to-end data provenance. For regulated financial institutions, that provenance is exactly what auditors and compliance teams need. For consulting firms delivering these programs, it's the difference between a defensible methodology and a best-effort exercise.

What This Means for Consulting Firms

For Tier 1 consulting firms and systems integrators delivering modernization programs, post-migration reconciliation is often where project timelines stretch and client confidence erodes. The migration itself may seem to go smoothly, but then weeks of reconciliation cycles (investigating discrepancies, tracing values back through legacy systems, re-running transformation logic) consume budget and test relationships.

Tooling that accelerates both sides of this equation changes the engagement model. Migration mapping and transformation that would have taken a team months can be completed by a smaller team in weeks. Lineage analysis that would have required dedicated mainframe SMEs for months of manual code review becomes an interactive research exercise. And the reconciliation evidence is built into the process, not assembled after the fact.

This translates directly to engagement economics: faster delivery, reduced SME dependency, lower risk of costly rework, and a more compelling value proposition when scoping modernization programs.

Practical Steps for Stronger Reconciliation

Whether you're leading a migration internally or advising a client through one, these principles will strengthen your reconciliation outcomes.

- Start with lineage, not mapping. Before you map a single field, understand the business logic in the source system. What calculations are performed? What conditional branches exist? What upstream dependencies feed the values you're migrating? This upfront investment pays for itself many times over during reconciliation.

- Track every transformation decision. Every mapping, every transformation rule, every data coercion should be logged and traceable. When discrepancies surface during reconciliation — and they will — you need to be able to reconstruct exactly what happened to any given value.

- Profile data before and after. Automated data profiling at both the source and target gives you aggregate-level validation — record counts, completeness rates, value distributions, data type consistency — before you ever get to record-level comparison. This is your first line of defense and often catches systemic issues early.

- Don't treat reconciliation as pass/fail. Not every discrepancy is an error. Some reflect intentional business logic differences between old and new systems. The goal isn't zero discrepancies — it's understanding and documenting every discrepancy so stakeholders can make informed decisions about go-live readiness.

- Build for repeatability. If your organization does migrations frequently — onboarding new clients, integrating acquisitions, switching vendors — your reconciliation approach should be systematized. What you learn from one migration should make the next one faster and more reliable.
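
The "profile data before and after" principle is straightforward to sketch. This Python example computes row counts, per-column completeness, and distinct-value counts on both sides and reports every metric that changed. The column names and the "any difference is reported" rule are assumptions. In keeping with the point that reconciliation is not pass/fail, the output is a list of findings to explain, not a verdict.

```python
def profile(rows: list) -> dict:
    """Per-column completeness (share of non-empty values) and distinct-value count."""
    columns = sorted({col for row in rows for col in row})
    result = {"row_count": len(rows), "columns": {}}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]
        result["columns"][col] = {
            "completeness": round(len(present) / len(rows), 3) if rows else 0.0,
            "distinct": len(set(present)),
        }
    return result


def compare_profiles(before: dict, after: dict) -> list:
    """List differences between two profiles for a human to explain or fix."""
    findings = []
    if before["row_count"] != after["row_count"]:
        findings.append(f"row_count: {before['row_count']} -> {after['row_count']}")
    for col, stats in before["columns"].items():
        other = after["columns"].get(col)
        if other is None:
            findings.append(f"{col}: missing in target")
            continue
        for metric, value in stats.items():
            if other[metric] != value:
                findings.append(f"{col}.{metric}: {value} -> {other[metric]}")
    return findings


source_rows = [
    {"id": 1, "state": "NY", "email": "a@x.com"},
    {"id": 2, "state": "MA", "email": ""},
    {"id": 3, "state": "NY", "email": "c@x.com"},
]
target_rows = [
    {"id": 1, "state": "New York", "email": "a@x.com"},
    {"id": 2, "state": "Massachusetts", "email": ""},
    {"id": 3, "state": "New York", "email": None},
]

print(compare_profiles(profile(source_rows), profile(target_rows)))
```

Note that the intentional state-name expansion leaves the `state` profile unchanged, while the lost email address shows up immediately as a drop in completeness, before any record-level comparison is run.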

The goal of any modernization program isn't the migration itself — it's the value that comes after. Faster operations, better insights, reduced risk, regulatory confidence. Reconciliation is the bookend that earns trust in the change and clears the path to that value.

Zengines combines AI-powered data migration with mainframe data lineage to give enterprises and consulting firms full traceability from source to target — so you can prove the migration worked and move forward with confidence.

Mainframes aren't going anywhere overnight. Despite the industry's push toward cloud migration and modernization, the reality is that many financial institutions still rely on mainframe systems to process millions of daily transactions, calculate interest accruals, manage account records, and run core business operations. And they will for years to come.

Modernization is the eventual reality for every organization still running on mainframe. But "eventual" is doing a lot of heavy lifting in that sentence. For many financial institutions, a full modernization effort is on the roadmap but years away — dependent on budget cycles, vendor timelines, regulatory considerations, and a hundred other competing priorities. In the meantime, these systems still need to be maintained — and that's where things get increasingly risky.

The hidden cost of "just making a change"

When a business requirement changes — say, a new regulation requires a different calculation methodology, or a product team needs to update how accrued interest is computed — someone has to go into the mainframe and update the code. Sounds straightforward enough. Except it's not.

Mainframe COBOL codebases are often decades old. They've been written, rewritten, and patched by generations of engineers, many of whom have long since left the organization. A single mainframe environment can contain tens of thousands of COBOL modules, each with hundreds or thousands of lines of code. Variables branch across modules. Tables are read and updated in ways that aren't always documented. Conditional logic sends data down different paths depending on record types, dates, or account classifications that may have made perfect sense in 1998 but aren't intuitive to anyone working today.

Before a mainframe engineer can write a single new line of code, they need to answer a deceptively simple question: What will this change affect?

And answering that question — tracing a variable backward through modules, understanding which tables get updated, identifying upstream and downstream dependencies — can take weeks or even months of manual investigation. One engineer we've worked with estimated that investigating the impact of a change takes substantially longer than actually making the change.

Why mainframe management feels like navigating a black box

The term "black box" gets used a lot in mainframe conversations, and for good reason. The challenge isn't that the code doesn't work — it usually works remarkably well. The challenge is that nobody fully understands how and why it works the way it does.

Consider what a typical investigation looks like without modern tooling. An engineer receives a request from the business: "We need to update how we calculate X." To comply, that engineer has to:

- Determine a relevant starting point for researching “X”, which may be a business term or a system term. This starting point could be, for example, a system variable in a frequently accessed COBOL module.

- Open the relevant COBOL module (which might be thousands of lines long)

- Find and trace the variable in question through the code

- Identify every table and field it touches

- Follow it across modules when it gets called or referenced elsewhere, keeping track of pathways where the variable may take on a new name

- Map out conditional branching logic that might treat the variable differently based on account type, date ranges, or other factors

- Determine which downstream processes depend on the output

- Document all of this before they can even begin to assess whether the change is safe to make

Now multiply that by the reality that a single environment might have anywhere from 50,000 to 5,000,000 modules. It's not hard to see why organizations describe their mainframe as a black box, and why changes feel so high-stakes.

The real risk: unintended consequences

The fear isn't hypothetical. When an engineer updates a module without fully understanding the dependencies, the consequences can ripple across systems. A calculation that looked isolated might feed into downstream reporting. A field that seemed unused might actually be read by another module under specific conditions. A change to one branch of conditional logic might alter outputs for an account type that wasn't part of the original requirement.

These kinds of unintended consequences don't always surface immediately. Sometimes they show up in reconciliation discrepancies weeks later. Sometimes a client calls and says, "My statement looks different this month." By that point, the investigation to find the root cause is just as painful as the original change — if not more so.

This is why many mainframe teams default to a conservative posture. They move slowly, pad timelines, and layer in extensive manual review. Not because they aren't skilled, but because the risk of getting it wrong is too high and the tools available to them haven't evolved with the complexity of the systems they manage.

A better approach: data lineage for mainframe management

This is where mainframe data lineage changes the equation. Rather than manually tracing code paths and building dependency maps from scratch every time a change is requested, data lineage technology can parse COBOL modules at scale and generate a comprehensive, searchable view of how data flows through the system.

With data lineage in place, that same engineer who used to spend months investigating a change can now:

- Search for a specific variable, table, or field and immediately see every module that reads, writes, or updates it

- Trace the data path forward and backward to understand exactly where a value originates and where it ends up

- View calculation logic to understand the mathematical expressions and business rules embedded in the code

- Identify conditional branching to see where and why data gets treated differently based on record types or other criteria

- Understand cross-module dependencies to assess the full blast radius of a proposed change before making it
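
The first capability in the list above, searching for a field and seeing every module that reads or writes it, amounts to an inverted index over parsed statements. Here is a minimal Python sketch, assuming a parser has already reduced each module to (field, access) pairs. The module and field names are hypothetical.

```python
from collections import defaultdict

# Hypothetical parser output: module -> list of (field, "read" | "write").
PARSED_MODULES = {
    "INTCALC": [("PRINCIPAL-BAL", "read"), ("DAILY-RATE", "read"), ("ACCRUED-INT", "write")],
    "STMTGEN": [("ACCRUED-INT", "read"), ("STMT-LINE", "write")],
    "GLPOST": [("ACCRUED-INT", "read"), ("GL-ENTRY", "write")],
    "RATESET": [("ANNUAL-RATE", "read"), ("DAILY-RATE", "write")],
}


def build_index(parsed: dict) -> dict:
    """Invert module -> accesses into field -> {"read": [...], "write": [...]}."""
    index = defaultdict(lambda: {"read": [], "write": []})
    for module, accesses in parsed.items():
        for field, access in accesses:
            if module not in index[field][access]:  # list each module once per access type
                index[field][access].append(module)
    return index


index = build_index(PARSED_MODULES)
print(index["ACCRUED-INT"])
```

Before changing how `ACCRUED-INT` is computed in `INTCALC`, the engineer can see at once that statement generation and general-ledger posting both consume it. Repeating the lookup on what those modules write walks the dependency chain further out.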

Instead of navigating thousands of lines of raw COBOL to answer a single question, the engineer gets a curated, structured view of exactly the information they need. The investigation that used to take months can happen in minutes.

Not just for modernization day — for every day between now and then

Much of the conversation around mainframe data lineage focuses on migration and modernization. And yes, lineage is critical for those efforts — but the value starts long before modernization kicks off.

Every time a business requirement changes, every time a regulation is updated, every time an engineer needs to write or modify code — they're navigating the same black box. Data lineage doesn't just prepare you for the future. It makes your mainframe safer and more manageable right now, during the months or years between today and the day you're ready to modernize.

For mainframe teams, it means less time investigating and more time executing. For risk and compliance leaders, it means greater confidence that changes won't introduce unintended consequences. For the business, it means faster turnaround on change requests without increasing operational risk.

And when modernization day does arrive, you'll be ready

Here's the other advantage of investing in data lineage now: when your organization is ready to modernize, you won't be starting from scratch.

Modernization isn't just about moving everything from the old system to the new one. It requires making deliberate decisions about what to bring forward and what to leave behind. Which business rules are still relevant? Which calculations need to be replicated exactly, and which should be redesigned? Which data paths reflect current requirements, and which are artifacts of decisions made decades ago?

Without lineage, those questions send teams back into the same manual investigation cycle — except now they're doing it across tens of thousands of modules under the pressure of a migration timeline. With lineage already in place, your team walks into modernization with a comprehensive understanding of how the current system works, what it does, and why.

And the value doesn't stop at cutover. Post-migration, lineage gives you a baseline for reconciliation. When the new system produces a different output than the old one — and it will — lineage helps you trace back to the original logic and understand why the results differ. Was it an intentional change? A missed business rule? A calculation that was carried over incorrectly? Instead of guessing, your team can pinpoint the source of the discrepancy and resolve it with confidence.

The mainframe isn't the problem. The lack of visibility is.

Organizations that rely on mainframes aren't behind — they're running proven, reliable infrastructure that processes critical transactions every day. The challenge has never been the mainframe itself. It's that the tools and processes for understanding what's inside it haven't kept pace with the complexity of the systems or the speed at which the business needs to evolve.

Data lineage closes that gap. Whether modernization is two years away or five, understanding what's inside the black box isn't something you can afford to wait on. Your teams need that visibility today to manage changes safely — and they'll need it even more when the time comes to move forward.

Zengines' Mainframe Data Lineage solution parses COBOL code at scale to give your team searchable, visual access to the data paths, calculation logic, dependencies, and business rules embedded in your mainframe.

